By Ian Tran

Recent advancements in AI have been taking the world by storm. In particular, OpenAI’s revolutionary language model, ChatGPT, is being used extensively by individuals and businesses alike, disrupting multiple industries including healthcare, customer service and finance. For example, its latest version, GPT-4, is being used as:

• An in-house search assistant at Morgan Stanley, helping advisors scan thousands of pages of market research commentary, investment strategies and analyst insights to find answers to specific questions;

• A personal tutor for students at Khan Academy, answering students’ questions constructively and in a way tailored to each student;

• A support assistant at the payment processing company Stripe, reviewing clients’ websites, troubleshooting issues, answering technical questions and detecting fraud on community platforms.

Other technology powerhouses, such as IBM and Google, are also introducing natural language processing (NLP) models that are seeing great commercial success. As NLP capabilities advance and become more widespread, we are starting to ask how we should adapt to this paradigm shift, and whether it will eventually replace our jobs.

To understand the capabilities and limitations of ChatGPT, we must first understand how the technology works.

GPT, short for “Generative Pre-trained Transformer”, is a language model that generates human-like text based on patterns learned from the vast amount of text on which it was pre-trained. This means it does not learn from user input, and its knowledge is limited to the text it was trained on, up to September 2021. Its latest version, GPT-4, can interpret multimodal inputs, accepting both images and text, which opens up more use cases than its text-only predecessor, GPT-3.5.

Deep dive – Transformers

In the past, deep learning models understood text using Recurrent Neural Networks (RNNs), which process a sentence one word at a time while carrying forward a memory of the preceding words. However, they struggled with long sequences: because each step depends on the result of the previous one, the computation was difficult to parallelise.

What separates GPT from other NLP models is the “Transformer” neural network architecture. The breakthrough of the transformer is that its sequence processing is highly parallelisable. This means training can be sped up by adding more computing power, so the model can be trained on much larger amounts of data.
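To make the contrast concrete, here is a minimal toy sketch in Python; it is an illustration of the dependency structure only, not how GPT is actually implemented. The RNN-style function must walk through the sequence in order, because each hidden state needs the previous one. The second function is a hypothetical stand-in for a transformer layer: every position reads the whole input directly (here through a plain average rather than real attention), so no position waits on another and all of them could be computed at the same time. The weights `w_h` and `w_x` are arbitrary illustrative values.

```python
import math


def rnn_forward(inputs, w_h=0.5, w_x=1.0):
    """Toy scalar RNN: each hidden state depends on the previous one,
    so the loop below cannot be split across processors."""
    h = 0.0
    states = []
    for x in inputs:
        h = math.tanh(w_h * h + w_x * x)  # needs h from the previous step
        states.append(h)
    return states


def per_position_forward(inputs, w_x=1.0):
    """Toy position-wise layer (hypothetical stand-in for a transformer layer)."""
    # Every position reads the whole sequence directly (a plain average here,
    # standing in for attention) and never waits for another position's output,
    # so all positions could be computed in parallel.
    context = sum(inputs) / len(inputs)
    return [math.tanh(w_x * x + context) for x in inputs]


print(rnn_forward([1.0, 0.0, 2.0]))
print(per_position_forward([1.0, 0.0, 2.0]))
```

Both functions give one output per word; the difference is that the first has a chain of dependencies running through the sequence, while the second has none between positions, which is what lets transformers make use of extra hardware.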

Another key advantage of transformers is that they employ self-attention.

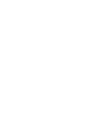

The self-attention mechanism allows the model to focus on specific parts of the input data when making predictions or decisions, taking into account the context in which each word is used.

To picture this, imagine you are trying to understand a sentence in a foreign language. You might focus on the words you recognise or think are important, and ignore others. Similarly, when processing input data, the attention mechanism allows the neural network to assign different levels of importance to different parts of the data. It can then use this information to make more accurate predictions or decisions.

For example, in the French-to-English translation below, when producing the output word “European”, the model focused on the words “économique” and “européenne”.

Taken from “Attention Is All You Need” (Vaswani et al., 2017)
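The core calculation behind attention in transformers is usually written as softmax(QKᵀ/√d)·V, the scaled dot-product attention of Vaswani et al. (2017). Below is a minimal pure-Python sketch of it: each word’s query is compared with every word’s key, the scores are turned into weights that sum to 1, and the output for that word is the weighted mix of the values. As simplifying assumptions, the made-up word vectors are used directly as queries, keys and values (real transformers first multiply them by learned projection matrices). In a translation like the one above, the queries come from the output sentence and the keys and values from the input sentence, but the idea of scoring, normalising and mixing is the same.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d)) V.

    queries, keys, values: lists of equal-length vectors, one per word.
    Returns the output vectors and the attention weights for each word.
    """
    d = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # How well this word's query matches every word's key.
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # Weighted mix of the value vectors, component by component.
        out = [sum(w_j * v[c] for w_j, v in zip(w, values)) for c in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights


# Hypothetical 2-dimensional vectors for a three-word sentence.
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(embeddings, embeddings, embeddings)
# Word 0 attends equally to itself and to word 2, and less to word 1.
print(weights[0])
```

The attention weights play the role of the “levels of importance” described above: a larger weight means that word contributes more to the output for the word being processed.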

Limitations

ChatGPT, like all NLP models, is limited by the quality and quantity of the data it has been trained on. If the model has not been trained on a diverse and representative dataset, it may not be able to generate accurate responses to inputs that are outside of its training data.

Because offshore wind foundation design is a highly specialised and rapidly evolving field, little accurate information about it was available online before September 2021, so many of ChatGPT’s responses on the topic are outdated or incorrect.

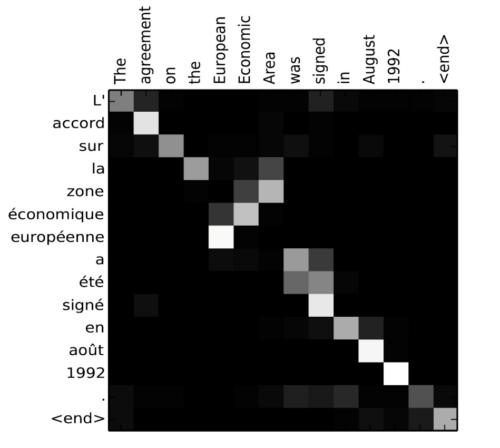

For example, GPT-3.5 failed to identify concrete as an excellent corrosion-resistant material for the External Working Platform (EWP), even though this is common knowledge in the industry. It even suggested fibre-reinforced plastic, which has never been seen in the industry.

As a “generative model”, GPT’s main goal is to produce a natural-sounding response, so it struggles with large, complicated problems such as complex maths questions, or generating many lines of code with nested loops or complex logic. Its uses in offshore wind, an engineering-heavy industry, are therefore limited. The base version of GPT-4 can nevertheless be used to enhance productivity and inspire creativity in the following ways:

• Report writing

• Accurate translation

• Writing snippets of code and debugging

However, with integration into existing systems and further supervised training, GPT could become a powerful and accurate tool with many potential uses in offshore wind:

• A search assistant, trained on documents in various formats (PDFs, engineering drawings, etc.), that allows users to easily locate previous technical data, specific standards or safety procedures, or even summarise key findings

• A training tool that creates customised assessments and provides constructive feedback

• A customer service assistant that can answer client queries (to an extent) and provide guidance on how to use services effectively.
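A search assistant of this kind first has to find the right documents before any summarising can happen. The article does not say how that retrieval step would work, so the following is only a minimal sketch under an assumed, deliberately simple approach: rank documents by how many distinct query words they contain. The document titles and contents are hypothetical, and a production tool would likely use more robust ranking (for example TF-IDF or embeddings) and text extracted from the PDFs and drawings.

```python
import re


def tokenize(text):
    """Lower-case the text and return its set of distinct words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def rank_documents(query, documents, top_k=3):
    """Rank documents by how many distinct query terms they contain.

    documents: dict mapping a title to the document's text.
    Documents sharing no terms with the query are left out.
    """
    q_terms = tokenize(query)
    scored = []
    for title, body in documents.items():
        overlap = len(q_terms & tokenize(body))
        if overlap:
            scored.append((overlap, title))
    # Highest overlap first; ties broken alphabetically by title.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [title for _, title in scored[:top_k]]


# Hypothetical document store.
documents = {
    "EWP corrosion note": "Concrete external working platform corrosion protection",
    "Monopile fatigue memo": "Fatigue assessment of monopile welds",
    "Safety procedure": "Working at height safety procedure for the external working platform",
}
print(rank_documents("external working platform corrosion", documents))
```

The top-ranked passages would then be handed to the language model as context for its answer, which keeps its response tied to the company’s own documents rather than to its general training data.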

While powerful, ChatGPT’s current level of reasoning and technical accuracy is not sufficient to completely replace human roles in certain contexts. Outside the world of NLP, however, other AI technologies have been pushing the boundaries in offshore operations and maintenance:

– Machine learning algorithms, initially trained on historical data, are used to detect yaw misalignment and assess pitch bearing health by analysing data from the sensors already installed on the turbine.

– DNV, the University of Bristol and Perceptual Robotics developed a fully automated system that captures images of wind turbines, detects surface defects and feeds the data into a data-processing pipeline. Compared with human reviewers, it reduced data review turnaround time by 27 percent and was 14 percent more accurate at detecting faults.

– Autonomous underwater vehicles (AUVs) are being introduced to reduce the cost of complex cable and foundation survey projects. Rovco, for example, spent four years developing a machine learning system that can recognise and identify underwater objects, allowing AUVs to perform surveys and make real-time decisions, including generating 3D reconstructions of potential hazards.
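The pattern in the first example above (learn what “normal” looks like from historical sensor data, then flag new readings that deviate from it) can be sketched in a few lines. The article does not say which algorithms are used, so this swaps in the simplest possible stand-in, a statistical threshold: “training” just records the mean and standard deviation of historical yaw-error readings, and any new reading more than three standard deviations away is flagged. The readings, in degrees, and the threshold of 3 are hypothetical.

```python
from statistics import mean, stdev


def fit_baseline(historical):
    """'Train' on historical readings by learning their mean and spread."""
    return mean(historical), stdev(historical)


def flag_anomalies(readings, baseline, threshold=3.0):
    """Return the indices of readings more than `threshold` standard
    deviations away from the historical mean."""
    mu, sigma = baseline
    return [i for i, r in enumerate(readings) if abs(r - mu) > threshold * sigma]


# Hypothetical yaw-error readings (degrees) from a healthy period.
historical = [0.5, -0.3, 0.2, 0.0, -0.4, 0.1, 0.3, -0.2]
baseline = fit_baseline(historical)

# Hypothetical new readings; two of them drift well outside the normal range.
new_readings = [0.1, 6.5, -0.2, 7.1]
print(flag_anomalies(new_readings, baseline))
```

Real condition-monitoring models are considerably more sophisticated, combining many sensor channels and operating conditions, but they share this structure of fitting to historical data and scoring new data against it.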

Discussion and Conclusion

GPT is unlikely to completely replace our roles in offshore wind within the next decade. Its technical accuracy cannot be relied upon, though with further training it could become a powerful tool to assist us in our work.

AI can be, and has been, used to take on more complex tasks, such as surveying and processing the survey data. In the future, if trained on enough data and given sufficiently well-defined input parameters, an AI-powered tool may be able to design an entire foundation. However, that is still some way off, given the limited availability of easily accessible, structured data on foundation design.

In addition to technical challenges, the use of AI in designing offshore wind foundations raises significant moral and legal concerns. Specifically, there is the issue of how much trust can be placed in an AI-generated design.

Moreover, determining liability in the event of design failure becomes a critical issue. These concerns highlight the need for careful consideration and regulation of AI technology in offshore wind design, and emphasise the importance of human expertise in ensuring the safety and reliability of these structures.

In conclusion, NLP and machine learning should not be seen as a threat to our jobs, but rather embraced as complementary resources that help us work more effectively and efficiently.

Now, try guessing which parts of this article were written by ChatGPT.

Empire specialists can effectively and efficiently assist with your offshore wind project. To find out more, please get in touch with the team at Empire Engineering.